Don't die in the sandbox

There’s something happening at the edge of software and language. We’re watching the no-code movement collide with foundation models, and the result is a new generation of builders who don’t write code but ship software anyway.

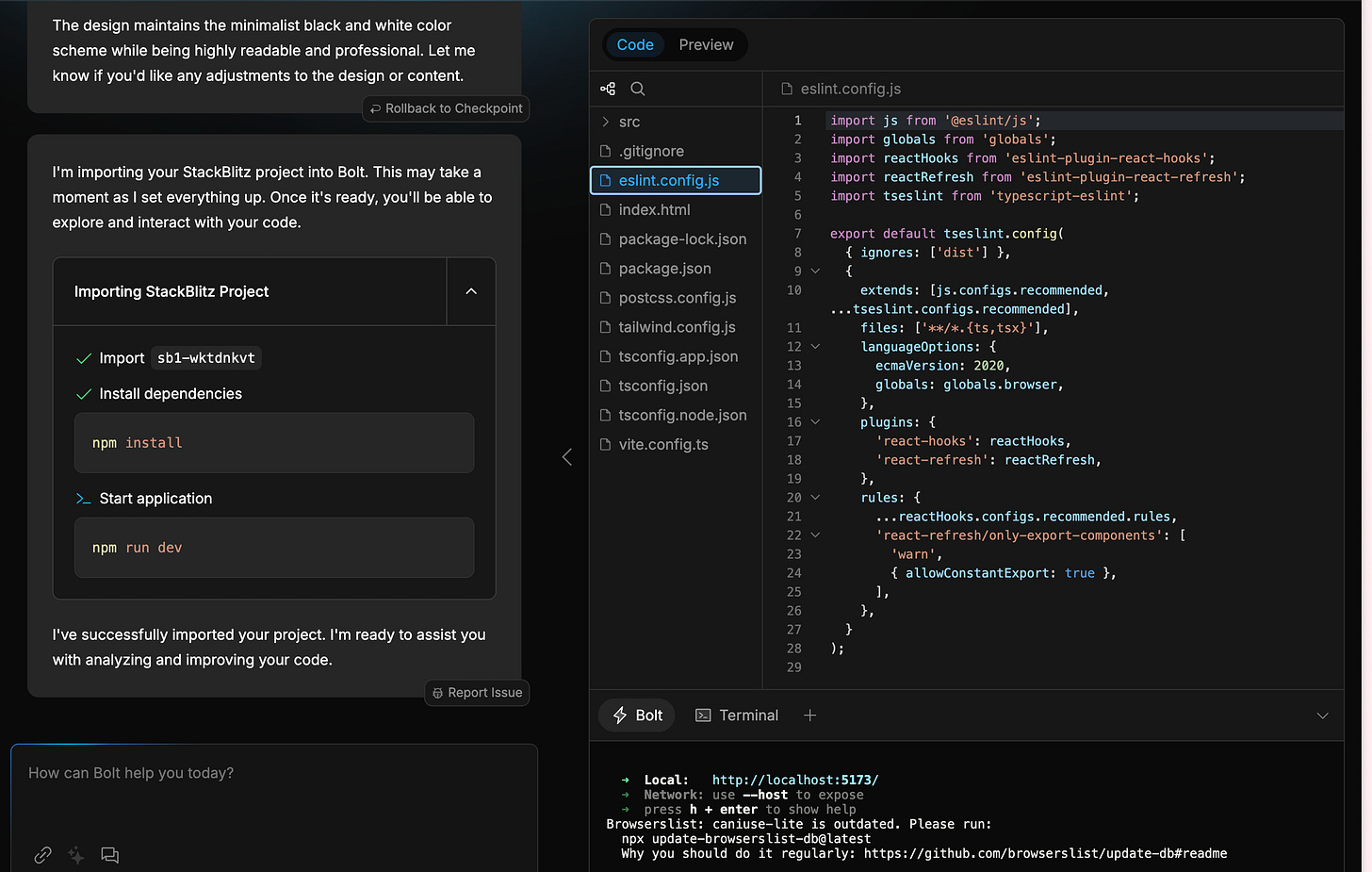

At the center of this shift are tools like Replit’s AI agent, Bolt.new, and Lovable. Each does something quietly radical: it lets you describe a product idea in natural language and then scaffolds the whole thing for you. Pages, buttons, databases, even logic. It all emerges from a few sentences.

This isn't your classic drag-and-drop no-code. It's not about stitching together blocks or templates; it's something closer to intent-matching. You say what you want, and the system handles the rest. It's smoother, more expressive, and significantly faster than anything that came before, which is why it's spreading so quickly.

It feels democratizing. And in some ways, it is. These tools are unlocking access for people who were previously shut out of software creation. If you have an idea and a rough sense of what it should do, you can now make it real, without waiting on a developer, learning React, or navigating AWS permissions.

Perhaps I’m more cynical than most, but I don’t think people are as creative as we like to imagine. Most people don’t walk around with a backlog of app ideas waiting to be built. When given a blank canvas and full flexibility, they don’t explode with possibilities. They stall.

This is the paradox of natural language interfaces: they’re freeing, but also directionless. If your product doesn’t connect to anything grounded (no live data, no existing workflow, no real source of friction), it often dies in the sandbox. A calendar visualizer with no events. A CRM for a non-existent client list. A tool with nowhere to plug in. Today, Replit, Bolt.new, and Lovable don’t connect to real internal data repositories.

The apps that actually get built and used tend to have a different characteristic: they’re anchored. They integrate. In many cases, they don’t ask you to invent something from scratch; they ask you to improve something that already exists.

That’s why the next real frontier in this space isn’t just better LLM prompting or faster app generation. It’s context. Specifically, the ability to connect to your real systems: your email, your calendar, your Notion docs, your Salesforce instance. Your working memory.

When these no-code tools can listen to what’s happening in your world (not just parsing your prompt, but understanding your state), they stop being toy builders and start becoming infrastructure. You won’t just build something interesting. You’ll build something useful.

And the creativity? It shows up the moment people stop staring at a blank screen and start responding to something real: a sales workflow that’s broken, a client onboarding experience that’s clunky, a process they’ve duct-taped together in spreadsheets for the last six months.

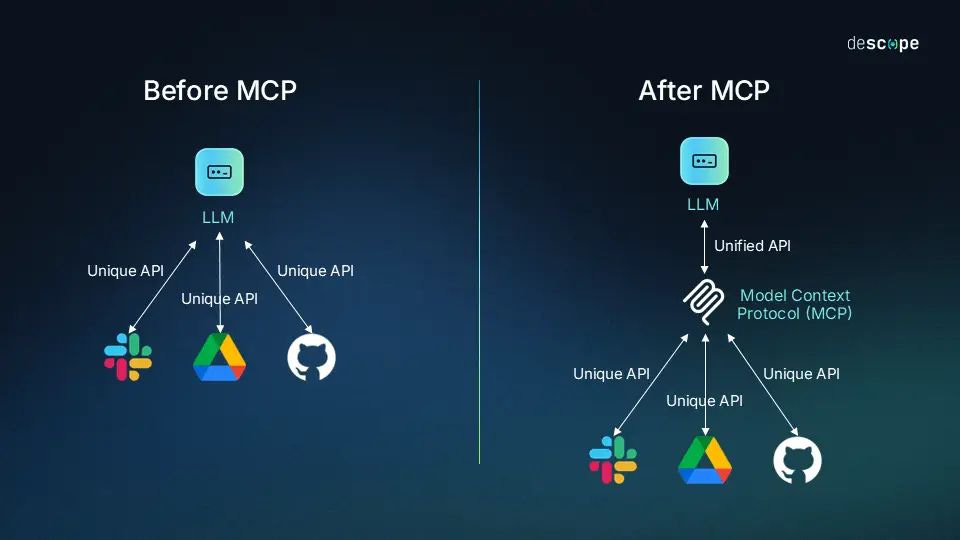

This is where the Model Context Protocol (MCP) comes into play. At its core, MCP is a standardized way for language models to access, interpret, and operate on personal or organizational data. Think of it as the connective tissue between a user’s intent and their actual digital footprint. Instead of prompting a model in isolation, users interact with a system that understands their calendar, their task list, their docs, and their team, not through brittle one-off API integrations but through a shared contextual substrate. The result is software that feels less like a guessing engine and more like a true collaborator.
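To make “connective tissue” a little more concrete, here is a minimal sketch of the server side of an MCP-style exchange. MCP messages are JSON-RPC 2.0 requests and responses: a server advertises the tools it offers through a `tools/list` method, and the model’s client invokes one with `tools/call`. Everything else here is an assumption for illustration: the `list_calendar_events` tool, its schema, and the fake calendar data are hypothetical, and the field names follow my reading of the published spec rather than any particular SDK. It also skips the `initialize` handshake and the transport layer a real server needs.

```python
import json
from datetime import date

# Hypothetical stand-in for a user's real calendar; a real server would
# query an actual calendar API here.
CALENDAR = [
    {"title": "Client onboarding call", "date": "2025-06-02"},
    {"title": "Pipeline review", "date": "2025-06-03"},
]

# Tool description in the shape an MCP server returns from "tools/list".
# The tool name and schema are made up for this example.
TOOLS = [
    {
        "name": "list_calendar_events",
        "description": "Return calendar events on or after a given ISO date.",
        "inputSchema": {
            "type": "object",
            "properties": {"since": {"type": "string"}},
            "required": ["since"],
        },
    }
]


def list_calendar_events(since: str) -> list:
    """Filter the fake calendar to events on or after `since` (YYYY-MM-DD)."""
    cutoff = date.fromisoformat(since)
    return [e for e in CALENDAR if date.fromisoformat(e["date"]) >= cutoff]


def rpc_error(req_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "list_calendar_events":
            # -32602 is the standard JSON-RPC "Invalid params" code.
            return rpc_error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        events = list_calendar_events(**params.get("arguments", {}))
        # Tool results come back as a list of content items; here, one text item.
        result = {"content": [{"type": "text", "text": json.dumps(events)}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return rpc_error(req_id, -32601, f"Method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    # What a model-side client might send to ask for events from June 3 onward.
    call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "list_calendar_events",
                       "arguments": {"since": "2025-06-03"}}}
    print(handle(json.dumps(call)))
```

Run as a script, it prints a response whose single text item contains only the “Pipeline review” event. The point is the shape of the contract: the model never needs a bespoke integration for each app, only a uniform way to discover tools and call them. In practice you would likely build on one of the official MCP SDKs, which handle the handshake and transport plumbing omitted here.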

A good analogy for the Model Context Protocol is HTTP itself. Just as HTTP standardized how browsers communicate with web servers, MCP aims to standardize how AI systems access and operate on user data across tools and platforms. Before HTTP, information on the internet was scattered across incompatible systems and largely inaccessible to most people. HTTP turned it into a composable, interactive web. MCP has the potential to do the same for intelligent software creation, turning context from a hidden constraint into a shared interface.

HTTP is what allows your browser (like Chrome or Safari) to talk to a website. When you type in a URL and hit enter, your browser sends an HTTP request to a server. The server responds with the page’s HTML, your browser fetches any linked resources (CSS, images, scripts) with further requests, and then it displays the page.
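For readers who have never seen that round trip up close, here is a minimal sketch using only Python’s standard library: a toy server that answers every GET with a tiny HTML page, and a `fetch` function playing the browser’s role. The handler name, page content, and helper functions are made up for illustration; it runs entirely on your own machine.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class PageHandler(BaseHTTPRequestHandler):
    """Toy web server: answers every GET request with a tiny HTML page."""

    def do_GET(self):
        body = b"<html><body><h1>Hello from the server</h1></body></html>"
        self.send_response(200)  # status line, e.g. "HTTP/1.0 200 OK"
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()  # blank line separates headers from the body
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def serve_in_background() -> HTTPServer:
    """Start the server on a free local port in a daemon thread."""
    server = HTTPServer(("127.0.0.1", 0), PageHandler)  # port 0: OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(url: str):
    """Play the browser's role: send a GET and return (status, content type, body)."""
    # Bypass any system proxy settings, since the server is on localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url) as resp:
        return resp.status, resp.headers["Content-Type"], resp.read().decode("utf-8")


if __name__ == "__main__":
    server = serve_in_background()
    status, content_type, html = fetch(f"http://127.0.0.1:{server.server_port}/")
    print(status, content_type)
    print(html)
    server.shutdown()
    server.server_close()
```

A real browser does the same thing at larger scale: after parsing the HTML, it issues further requests for each stylesheet, image, and script the page references. Neither side needs to know anything about the other beyond the shared protocol, which is exactly the property MCP is reaching for.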

In many ways, context protocols are the missing ingredient that elevates AI-native no-code tools from novelty to necessity. They don’t just make the tools more powerful; they make them trustworthy. When your app knows what project you’re working on, which clients are active, or what emails you haven’t responded to, the outputs feel intuitive, even prescient. This doesn’t just unlock new capabilities; it compresses the distance between idea and execution, because the scaffolding isn’t generic. It’s yours.

As these protocols mature, we’ll start to see a new class of applications emerge: deeply personal, auto-updating, and aware. They won’t just be built faster; they’ll age better, adapting in real time to your workflows and evolving alongside your needs. And the line between building software and shaping your environment will blur entirely.

This shift won’t make everyone a founder. But it will make a lot more people into builders, especially once the tools stop starting from zero.

That’s the shift that keeps me up at night: not just easier creation, but more contextualized creation. Fewer toy apps. More tools that actually get used.